Measure and Countermeasure of the Capsulation Attack against Backdoor-based Deep Neural Network Watermarks

Abstract

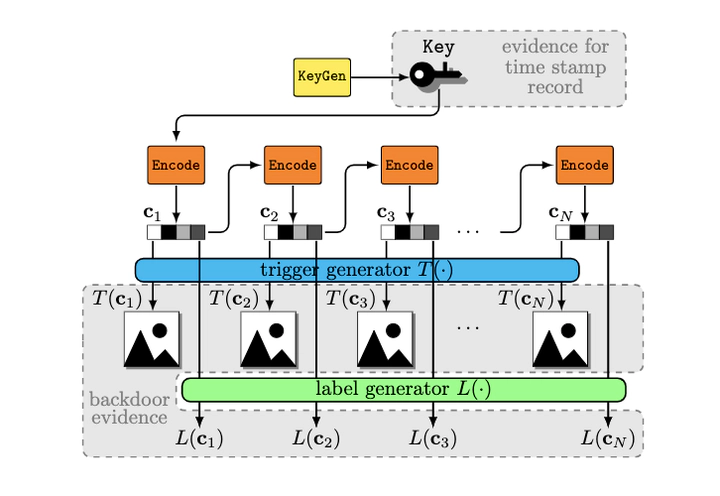

Backdoor-based watermarking schemes were proposed to protect the intellectual property of deep neural networks under the black-box setting. However, additional security risks emerge once these schemes are published as forensic tools. This paper reveals the capsulation attack, which can easily invalidate most established backdoor-based watermarking schemes without sacrificing the pirated model’s functionality. By encapsulating the deep neural network with a filter, an adversary can block abnormal queries and thereby evade ownership verification. We propose a metric that measures a backdoor-based watermarking scheme’s security against the capsulation attack, and we design a new backdoor-based deep neural network watermarking scheme that is secure against this attack by inverting the encoding process.
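The encapsulation idea can be illustrated with a minimal sketch. It is not the paper's implementation: `encapsulate`, `toy_model`, and `toy_detector` are hypothetical names, the "model" is a hand-written function with a pretend backdoor, and the detector simply assumes that normal features lie in [0, 1]. The point is only that a filter placed in front of the model can refuse trigger-like queries while leaving ordinary queries untouched.

```python
from typing import Callable, Optional, Sequence

Query = Sequence[float]
Model = Callable[[Query], int]
Detector = Callable[[Query], bool]


def encapsulate(model: Model, is_abnormal: Detector) -> Callable[[Query], Optional[int]]:
    """Wrap a model so that queries flagged as abnormal are rejected."""
    def capsuled(query: Query) -> Optional[int]:
        if is_abnormal(query):
            # Refuse to answer: the verifier never observes the backdoor label.
            return None
        return model(query)
    return capsuled


# Hypothetical stand-ins, for illustration only.
def toy_model(query: Query) -> int:
    # Pretend backdoor: a query whose first feature is 9.0 maps to label 7.
    if query[0] == 9.0:
        return 7
    # Ordinary behaviour: a simple threshold on the feature sum.
    return int(sum(query) > 1.0)


def toy_detector(query: Query) -> bool:
    # Assumption: normal features lie in [0, 1]; anything else is abnormal.
    return any(v < 0.0 or v > 1.0 for v in query)


capsuled_model = encapsulate(toy_model, toy_detector)
normal_query = [0.2, 0.9, 0.4]
trigger_query = [9.0, 0.1, 0.3]

print(capsuled_model(normal_query))   # ordinary query is answered by the model
print(capsuled_model(trigger_query))  # trigger-like query is rejected
```

In this toy setting the unwrapped model still carries the backdoor, but the wrapped model never reveals it, which is why a watermark whose triggers are distinguishable from normal inputs would be vulnerable.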