Abstract

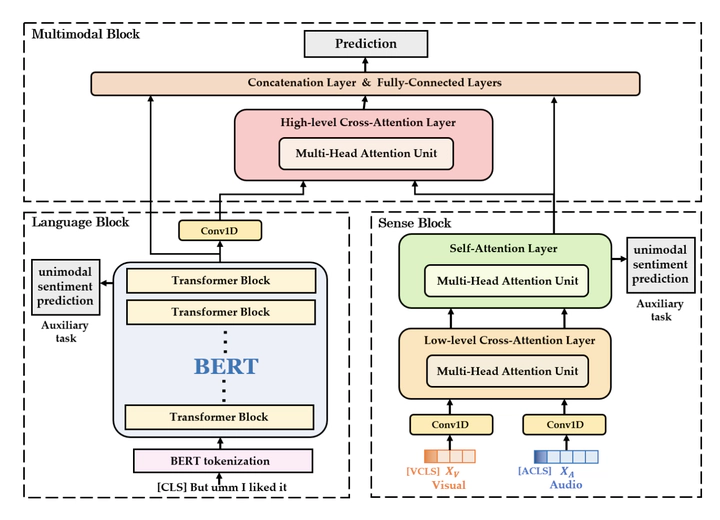

Humans convey emotions through verbal and non-verbal signals when communicating face-to-face. Pre-trained language models such as BERT can be fine-tuned to improve performance on various downstream tasks, including sentiment analysis. However, most prior work on BERT fine-tuning uses only unimodal textual data and lacks information from the sense organs, such as audio and visual signals, which are crucial for sentiment analysis. In this paper, we propose Sense-aware BERT (SenBERT), which integrates sense information into BERT during fine-tuning. In particular, we use multimodal multi-head attention to capture the interactions among unaligned multimodal data. Additionally, because different modalities carry different amounts of information, a multimodal network may be dominated by some modalities during training. We therefore propose unimodal sentiment analysis auxiliary tasks for multi-task learning, which force the model to attend to all modalities. We conduct experiments on the CMU-MOSI and CMU-MOSEI multimodal sentiment analysis datasets. The results show that SenBERT outperforms previous baselines on all metrics.
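The abstract names two mechanisms without giving their formulas. The sketch below illustrates both under stated assumptions, using NumPy: standard scaled dot-product multi-head attention in which text tokens act as queries over another modality's sequence of a different length (so no word-level alignment between modalities is required), and a multi-task objective that adds weighted unimodal auxiliary losses to the fused-prediction loss. The names `cross_modal_mha` and `multitask_loss` are hypothetical, the projection weights are random placeholders rather than learned parameters, and the L1 loss and `aux_weight` value are assumptions; this is not SenBERT's actual implementation.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_modal_mha(query_seq, context_seq, num_heads, rng):
    """Hypothetical cross-modal multi-head attention.

    query_seq:   (T_q, d) text features, used as queries.
    context_seq: (T_c, d) audio or visual features, used as keys and values.
    T_q and T_c may differ, so the modalities need not be aligned.
    """
    T_q, d = query_seq.shape
    assert d % num_heads == 0, "model dim must be divisible by num_heads"
    d_h = d // num_heads
    # Assumption: random projections stand in for learned weight matrices.
    W_q, W_k, W_v, W_o = (rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(4))

    def split(x):  # (T, d) -> (heads, T, d_h)
        return x.reshape(x.shape[0], num_heads, d_h).transpose(1, 0, 2)

    Q = split(query_seq @ W_q)
    K = split(context_seq @ W_k)
    V = split(context_seq @ W_v)
    scores = Q @ K.transpose(0, 2, 1) / np.sqrt(d_h)   # (heads, T_q, T_c)
    weights = softmax(scores, axis=-1)                  # each text token attends over all frames
    heads = weights @ V                                 # (heads, T_q, d_h)
    concat = heads.transpose(1, 0, 2).reshape(T_q, d)   # (T_q, d)
    return concat @ W_o, weights


def multitask_loss(pred_fused, preds_unimodal, target, aux_weight=0.1):
    """Fused loss plus weighted unimodal auxiliary losses (assumed L1 for all tasks)."""
    main = np.abs(pred_fused - target).mean()
    aux = sum(np.abs(p - target).mean() for p in preds_unimodal.values())
    return main + aux_weight * aux


# Usage: 5 text tokens attend over 12 unaligned audio frames.
rng = np.random.default_rng(0)
d = 8
text = rng.standard_normal((5, d))
audio = rng.standard_normal((12, d))
fused, attn = cross_modal_mha(text, audio, num_heads=2, rng=rng)
print(fused.shape, attn.shape)  # (5, 8) (2, 5, 12)
```

The auxiliary term penalizes each unimodal prediction head separately, so a modality whose head predicts poorly still contributes gradient even if the fused prediction is already accurate; this is one plausible way to keep a single modality from dominating training.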