Data-Free Watermark for Deep Neural Networks by Truncated Adversarial Distillation

Abstract

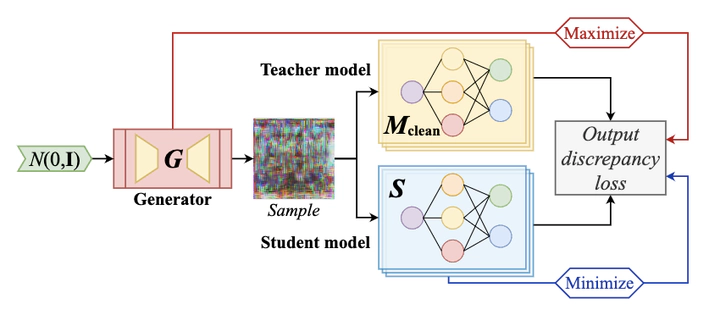

Model watermarking enables ownership verification and copyright protection for deep neural networks. In the black-box scenario, watermarking schemes commonly rely on injecting triggers and require the model’s training data to preserve its performance. However, this data might be unavailable in commercial settings such as model transactions or copyright transfers. To tackle this challenge, we propose a novel data-free black-box watermarking scheme. Our approach modifies data-free adversarial distillation to efficiently obtain a generator whose samples serve as a substitute for the training data, so that the watermark can achieve high fidelity without access to the training data.

Type

Publication

In IEEE International Conference on Acoustics, Speech and Signal Processing 2024